Adventures with Airflow

This is what Midjourney thinks MLOps looks like...

Why Airflow

Coming off of my recent stint at Amazon, I had plenty of time to get familiar with the tools provided by AWS. That said, some of the industry standards outside of Amazon were superseded by proprietary tools in our internal tech stack. The ones whose absence I felt the most were Airflow and Papermill, two tools that can make model training and monitoring much easier to productionalize if you’re willing to put in a little groundwork beforehand.

I decided that my work as part of the FastAI course would be a great opportunity to get hands-on experience with the tool and see how it compares to the tools I’m used to.

Setup

Airflow is open source and incredibly popular in the data engineering community. As a result, a rich ecosystem of tools and users has built up around it. For my experiment, I used one of those tools (Astronomer’s Astro CLI) instead of the vanilla Airflow setup. Astro is recommended as syntactic sugar, of sorts, for Airflow. It containerizes the components that make up Airflow and adds a few features that are crucial in production environments:

- Manages Airflow Deployments

- Scaling

- Security (Astronomer includes several security features, such as encryption at rest and in transit, role-based access control (RBAC), and single sign-on)

- Improved Monitoring and Logging

- CI/CD Integration

With it, I was able to get a server running in a few minutes and was off to the races.

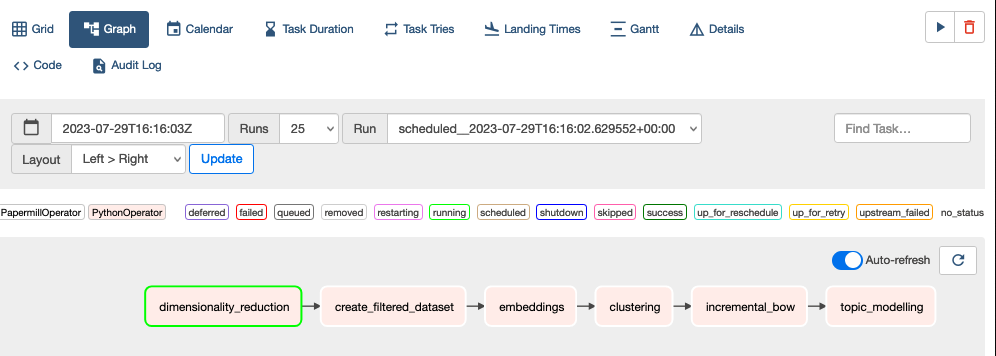

Building a pipeline

I created a simple graph to represent my model training pipeline, declared in plain Python. Fun tip: you can give GPT-4 a vague idea of the order of operations and it will produce a near-perfect draft immediately. This is also an easy way to explore configuration options for error handling or dependencies while you’re still getting the hang of everything else that’s new in the service.

Simple DAG, simple service

Like most services, the graphs are made up of operators, each corresponding to a discrete step in your model training workflow. These steps can run in series, in parallel, on a schedule and, contrary to the ‘acyclic’ in DAG, even in a continuous cycle with some database shenanigans.
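Airflow’s bitshift syntax (`a >> b`) is a big part of what makes the declaration feel so light. To show the idea without needing an Airflow install, here is a stdlib-only toy model: `Task` stands in for an operator, `>>` records an upstream dependency, and Python’s `graphlib.TopologicalSorter` groups the steps into waves that run in series, with the tasks inside a wave free to run in parallel. The `Task` class, the `execution_waves` helper and the task names are all hypothetical, and this is not how Airflow’s scheduler is actually implemented. The graph itself has to stay acyclic (`prepare()` rejects cycles), which is why any looping has to come from re-running the pipeline rather than from the graph.

```python
from graphlib import TopologicalSorter


class Task:
    """Toy stand-in for an Airflow operator: a named node in a dependency graph."""

    def __init__(self, task_id, dag):
        self.task_id = task_id
        self.dag = dag  # maps task_id -> set of upstream task_ids
        dag.setdefault(task_id, set())

    def __rshift__(self, other):
        # `a >> b` (or `a >> [b, c]`) makes `a` upstream of each right-hand task.
        downstream = other if isinstance(other, list) else [other]
        for task in downstream:
            self.dag[task.task_id].add(self.task_id)
        return other

    def __rrshift__(self, other):
        # `[a, b] >> c`: lists have no `>>`, so Python calls c.__rrshift__([a, b]).
        upstream = other if isinstance(other, list) else [other]
        for task in upstream:
            self.dag[self.task_id].add(task.task_id)
        return self


def execution_waves(dag):
    """Group tasks into waves whose upstream tasks are all finished.

    Tasks in the same wave don't depend on each other, so a scheduler could
    run them in parallel; the waves themselves run in series.
    prepare() raises graphlib.CycleError if the graph contains a cycle.
    """
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Hypothetical training pipeline: clean, then two independent steps, then train.
dag = {}
extract = Task("extract_data", dag)
clean = Task("clean_data", dag)
features = Task("build_features", dag)
validate = Task("validate_data", dag)
train = Task("train_model", dag)

extract >> clean >> [features, validate] >> train

for i, wave in enumerate(execution_waves(dag), start=1):
    print(f"wave {i}: {wave}")
```

Running it prints four waves, with `build_features` and `validate_data` sharing the third one.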

It’s worth looking through the hooks currently available for the service.

For my DAG, I used vanilla Python scripts for most of the steps, then hooked directly into my parameterized model training notebook for the final step using Papermill (worth talking about in another post).
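Papermill’s parameterization step is simple enough to sketch. As I understand it, papermill looks for the notebook cell tagged `parameters` and inserts a new cell tagged `injected-parameters` right after it, so the injected values override the defaults. The stdlib-only toy below imitates that step on a notebook represented as a plain dict, then runs the cells with `exec` as a stand-in for a Jupyter kernel. It is not papermill’s code; the `inject_parameters` and `code_cell` helpers, the notebook contents and the parameter names are hypothetical. In a real pipeline, `papermill.execute_notebook(input_path, output_path, parameters={...})` or Airflow’s `PapermillOperator` handles this and also saves the executed notebook.

```python
import copy


def inject_parameters(notebook, parameters):
    """Return a copy of `notebook` with a new cell assigning `parameters`.

    The new cell is tagged "injected-parameters" and placed right after the
    first cell tagged "parameters" (or at the top if there is none), loosely
    imitating papermill. The input notebook is not modified.
    """
    nb = copy.deepcopy(notebook)
    # repr() yields valid Python literals for simple values (numbers, strings, lists).
    source = "\n".join(f"{name} = {value!r}" for name, value in parameters.items())
    injected = {
        "cell_type": "code",
        "metadata": {"tags": ["injected-parameters"]},
        "source": source,
        "outputs": [],
        "execution_count": None,
    }
    insert_at = 0
    for i, cell in enumerate(nb["cells"]):
        if "parameters" in cell.get("metadata", {}).get("tags", []):
            insert_at = i + 1
            break
    nb["cells"].insert(insert_at, injected)
    return nb


def code_cell(source, tags=()):
    """Build a minimal nbformat-style code cell (hypothetical helper)."""
    return {"cell_type": "code", "metadata": {"tags": list(tags)},
            "source": source, "outputs": [], "execution_count": None}


# Hypothetical training notebook: defaults live in the "parameters" cell.
notebook = {
    "cells": [
        code_cell("epochs = 1\nlr = 1e-3", tags=["parameters"]),
        code_cell('summary = f"training for {epochs} epochs at lr={lr}"'),
    ],
    "metadata": {},
    "nbformat": 4,
    "nbformat_minor": 5,
}

run_nb = inject_parameters(notebook, {"epochs": 5, "lr": 0.01})

# Run every code cell in order in one namespace, as a stand-in for a kernel.
namespace = {}
for cell in run_nb["cells"]:
    if cell["cell_type"] == "code":
        exec(cell["source"], namespace)

print(namespace["summary"])
```

Because the injected cell runs after the defaults, this prints `training for 5 epochs at lr=0.01`, and the original notebook dict is left untouched for the next run.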

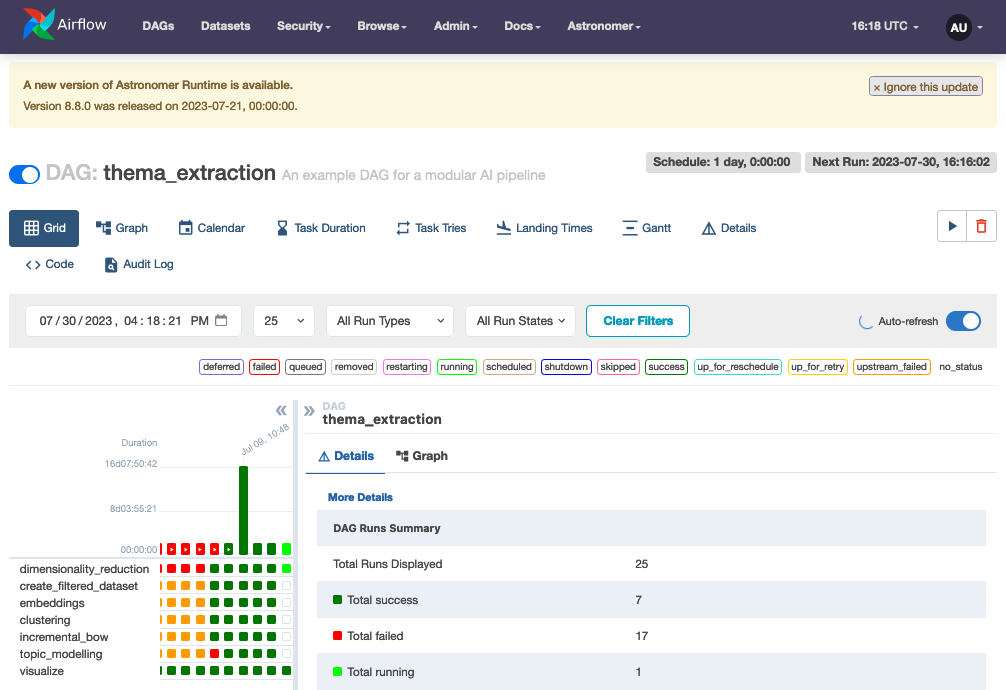

Example Dashboard provided out of the box

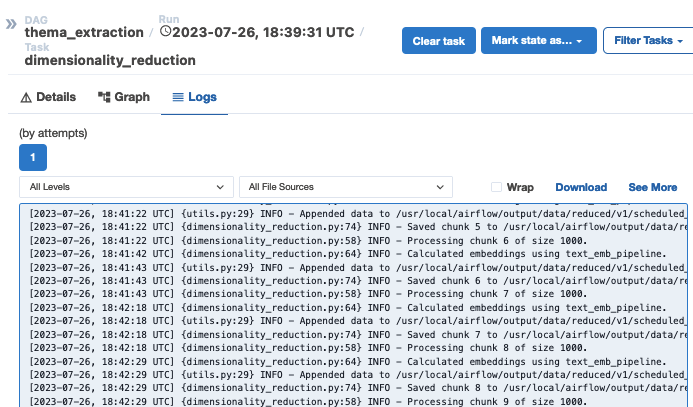

Realtime logs of each step of the pipeline

First impressions

Airflow has a lot of great things going for it at first glance:

- It’s a state machine on steroids, which eliminates tons of exception-handling code

- It isolates environment/state between each step (fewer cumulative, hard to diagnose bugs!)

- It’s Dockerized, so it’s easier to deploy to other environments capable of running Docker

- Useful/customizable UI/Dashboard included out of the box.

- Plugins for all kinds of services (including Papermill) out of the box
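Much of the exception-handling code Airflow saves you is retry logic. In a real DAG you set `retries` and `retry_delay` on a task (often through `default_args`, e.g. `{"retries": 2, "retry_delay": timedelta(minutes=5)}`), and the scheduler moves the task through states such as `running`, `up_for_retry`, `success` and `failed`. The stdlib-only toy below models that state machine for a single task. `run_with_retries` and `make_flaky_task` are hypothetical helpers, not Airflow APIs, and the real scheduler does far more (persisting task state in its metadata database, for one).

```python
import time
from datetime import timedelta


def run_with_retries(task, retries=2, retry_delay=timedelta(0)):
    """Run `task()` up to `retries + 1` times and record each state change.

    Returns (final_state, history). The state names echo Airflow's task
    instance states, but this loop is only a toy model of that behavior.
    """
    history = []
    for attempt in range(retries + 1):
        history.append("running")
        try:
            task()
        except Exception:
            if attempt == retries:  # out of attempts
                history.append("failed")
                return "failed", history
            history.append("up_for_retry")
            time.sleep(retry_delay.total_seconds())
        else:
            history.append("success")
            return "success", history


def make_flaky_task(failures_before_success):
    """Hypothetical task that raises a fixed number of times, then succeeds."""
    calls = {"count": 0}

    def task():
        calls["count"] += 1
        if calls["count"] <= failures_before_success:
            raise ConnectionError("transient failure")

    return task


state, history = run_with_retries(make_flaky_task(1), retries=2)
print(state, history)
```

With one transient failure and two retries allowed, the task ends in `success` after a single `up_for_retry`; a task that keeps failing ends in `failed` once its retries run out, with no try/except written in the task itself.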

Cons:

- Iteration is a bit slow. I haven’t found a good way to hot-swap my code packages yet, so each new update comes with a ‘compilation tax’ of sorts. I’ll look into this as I get more time.

Regardless, after getting it up and running, Airflow seems to be an incredible tool for any small shop looking to productionalize a workflow quickly. It has a lot of obvious advantages over rolling your own pipeline and seems to be more feature-rich than some proprietary options with comparable integrations. I’ll definitely consider it for future work and continue to use it on my own projects. With Astro, the overhead of something like Airflow pays for itself with the baked-in modularity, monitoring and portability of the tool.

Further competitors:

I’ve been reading up on the other orchestrators recommended besides Step Functions (Dagster, Luigi, Prefect, Kedro, and other cloud options). Most of these were created after Airflow and attempt to solve some of its perceived problems. If I get the time, I’ll try setting up the same pipeline in Dagster next.

Next Steps

I’m interested in learning more about Papermill and notebook integration into MLOps pipelines. Netflix popularized this approach a few years ago, but I haven’t heard much about it since. It seems like it could be useful for bridging the gap between production and research workflows, while also automatically producing human-readable reports of each training run.